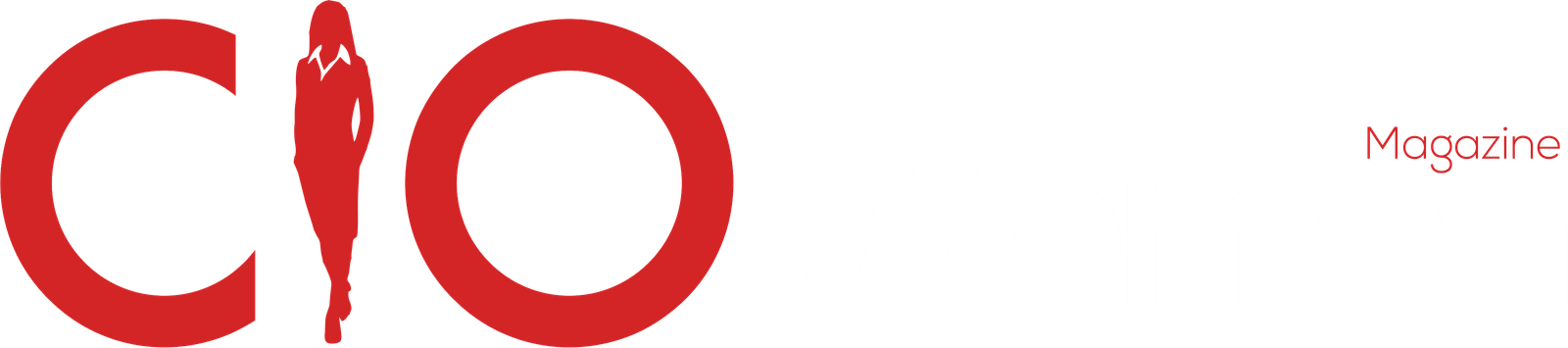

NVIDIA has unveiled the NVIDIA DGX GH200, a new large-memory AI supercomputer designed to support the development of next-generation models for generative AI language applications, recommender systems, and data analytics workloads.

Improved Memory Capacity

The DGX GH200 uses NVIDIA GH200 Grace Hopper Superchips and the NVIDIA NVLink Switch System to build a single large-memory computing platform. NVLink interconnect technology links 256 GH200 Superchips so that they function as a single GPU, delivering 1 exaflop of performance and 144 terabytes of shared memory. That is nearly 500x more memory than the previous-generation DGX A100, which was introduced in 2020.
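As a quick sanity check on those headline figures, the sketch below divides the system totals by the number of superchips. The function names are illustrative, and the terabyte-to-gigabyte convention (1 TB = 1024 GB) is an assumption; the article does not say which convention NVIDIA uses.

```python
# Back-of-the-envelope breakdown of the DGX GH200 headline numbers.
# System totals come from the article; the unit convention is an assumption.

SUPERCHIPS = 256          # GH200 Superchips per DGX GH200 (from the article)
SHARED_MEMORY_TB = 144    # total shared memory in terabytes (from the article)
PEAK_EXAFLOPS = 1.0       # quoted peak performance (from the article)

GB_PER_TB = 1024          # assumption: binary convention; pass 1000 for decimal


def per_superchip_memory_gb(total_tb=SHARED_MEMORY_TB,
                            chips=SUPERCHIPS,
                            gb_per_tb=GB_PER_TB):
    """Average shared memory contributed by one superchip, in GB."""
    return total_tb * gb_per_tb / chips


def per_superchip_petaflops(total_exaflops=PEAK_EXAFLOPS, chips=SUPERCHIPS):
    """Average peak performance per superchip, in petaflops."""
    return total_exaflops * 1000 / chips


if __name__ == "__main__":
    print(f"Memory per superchip: {per_superchip_memory_gb():.1f} GB")
    print(f"Peak per superchip:   {per_superchip_petaflops():.2f} PFLOPS")
```

Under the binary convention this works out to 576 GB per superchip (562.5 GB under the decimal one), which is close to the rounded 600GB building-block figure quoted for the superchip.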

Significance of Generative AI

Jensen Huang, NVIDIA’s CEO, highlighted the importance of generative AI, large language models, and recommender systems in driving the modern economy. He stated, “DGX GH200 AI supercomputers integrate NVIDIA’s most advanced accelerated computing and networking technologies to expand the frontier of AI.”

Each GH200 Superchip combines an Arm-based NVIDIA Grace CPU with an NVIDIA H100 Tensor Core GPU in the same package, connected by the NVLink-C2C chip interconnect. This eliminates the need for a traditional CPU-to-GPU PCIe connection and increases the bandwidth between CPU and GPU by 7x compared with the latest PCIe technology. The architecture also cuts interconnect power consumption by more than 5x and provides a 600GB Hopper architecture GPU building block for DGX GH200 supercomputers.
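The 7x figure can be roughly reproduced from published link speeds. The two bandwidth values below are not stated in this article; they are assumptions based on commonly quoted numbers (900 GB/s total for NVLink-C2C, and 128 GB/s bidirectional for a PCIe Gen5 x16 link), so treat the result as an approximation.

```python
# Rough reproduction of the "7x over PCIe" claim.
# Both bandwidth figures are assumptions, not taken from the article.

NVLINK_C2C_GB_PER_S = 900.0     # assumed total bidirectional NVLink-C2C bandwidth
PCIE_GEN5_X16_GB_PER_S = 128.0  # assumed PCIe Gen5 x16: ~64 GB/s in each direction


def bandwidth_ratio(new_gb_per_s, baseline_gb_per_s):
    """Return how many times faster the new link is than the baseline."""
    if baseline_gb_per_s <= 0:
        raise ValueError("baseline bandwidth must be positive")
    return new_gb_per_s / baseline_gb_per_s


if __name__ == "__main__":
    ratio = bandwidth_ratio(NVLINK_C2C_GB_PER_S, PCIE_GEN5_X16_GB_PER_S)
    print(f"NVLink-C2C vs PCIe Gen5 x16: about {ratio:.1f}x")
```

With these assumed inputs the ratio is just over 7, consistent with the claim in the text.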

Grace Hopper Superchips

The DGX GH200 is the first supercomputer to pair Grace Hopper Superchips with the NVIDIA NVLink Switch System, which lets all GPUs in a DGX GH200 system work together as one. The previous-generation system, by contrast, could combine only eight GPUs over NVLink without sacrificing performance. The DGX GH200 architecture provides 48x more NVLink bandwidth than its predecessor, offering the power of a massive AI supercomputer with the simplicity of programming a single GPU.

Tech Giants First in Line

Google Cloud, Meta, and Microsoft are among the first companies expected to gain access to the DGX GH200 to explore its capabilities for generative AI workloads. NVIDIA also plans to share the DGX GH200 design as a blueprint with cloud service providers and hyperscalers so they can customize it for their own infrastructure.

NVIDIA Helios Supercomputer

NVIDIA also announced the NVIDIA Helios supercomputer, which will be powered by four DGX GH200 systems interconnected with NVIDIA Quantum-2 InfiniBand networking. Helios is intended to supercharge data throughput for training large AI models and is expected to come online by the end of the year, where it will support NVIDIA's own research and development teams.
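To put the four-system configuration in perspective, the sketch below totals the superchips and memory across the Helios systems using the per-system figures given earlier in this article. The class and function names are hypothetical. It also assumes that each DGX GH200 keeps its own NVLink-shared memory pool and that InfiniBand links the systems as separate nodes, so the memory total is an aggregate rather than one shared address space.

```python
# Aggregate size of a multi-system cluster such as Helios.
# Per-system figures come from the article; names here are hypothetical.
from dataclasses import dataclass


@dataclass(frozen=True)
class DgxGh200:
    superchips: int = 256        # GH200 Superchips per system
    shared_memory_tb: int = 144  # NVLink-shared memory per system, in TB


def cluster_totals(num_systems, system=DgxGh200()):
    """Sum superchips and memory over identical systems.

    Assumption: memory is shared only within a system, so memory_tb is
    the sum of separate pools, not a single shared space.
    """
    if num_systems < 0:
        raise ValueError("num_systems must be non-negative")
    return {
        "superchips": num_systems * system.superchips,
        "memory_tb": num_systems * system.shared_memory_tb,
    }


if __name__ == "__main__":
    print(cluster_totals(4))
```

For four systems this gives 1,024 superchips and 576 TB of memory in total.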

DGX GH200 supercomputers are fully integrated and purpose-built for very large models. They ship with NVIDIA software, including NVIDIA Base Command and NVIDIA AI Enterprise, to provide a turnkey, full-stack solution for AI and data analytics workloads. NVIDIA Base Command handles AI workflow and cluster management, while NVIDIA AI Enterprise includes over 100 frameworks, pre-trained models, and development tools for building and deploying production AI applications.

When Will It Be Available?

NVIDIA DGX GH200 supercomputers are expected to be available by the end of the year. With 144 terabytes of shared memory and exaflop-class performance presented as a single GPU, the system is aimed at researchers, developers, and data scientists working with very large models.